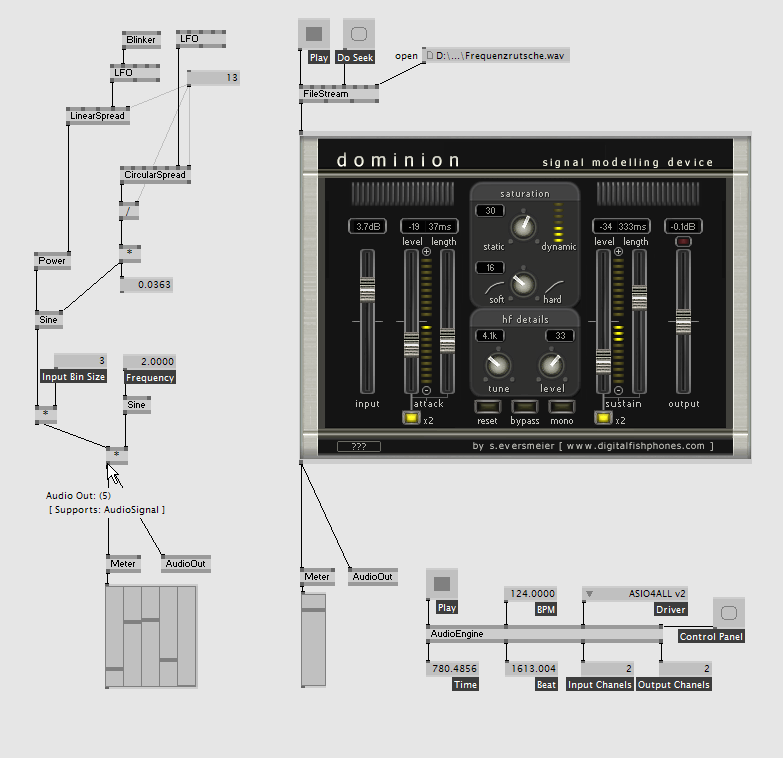

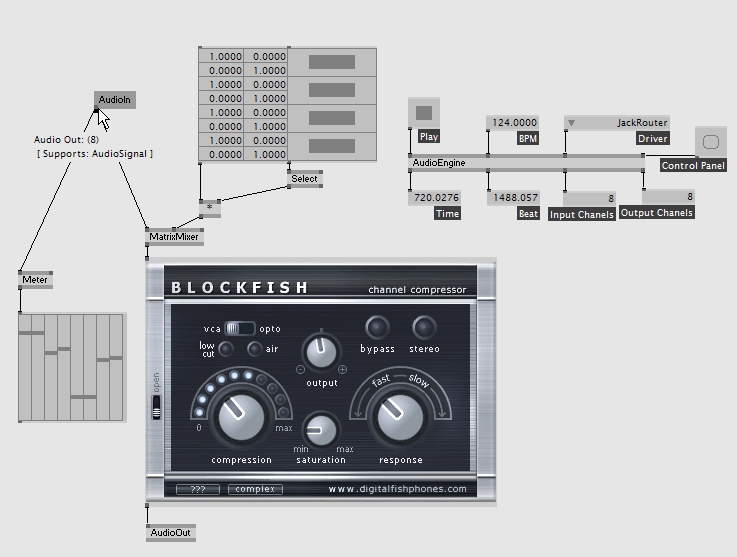

I have quite a task in front of me. We will develop (with the help of other coders) an audio engine with modular audio processing modules that can be connected in a signal-flow graph, similar to Max/MSP, Pure Data, Bidule, AudioMulch, CLAM and so on. It will be more focused on having VST plugins as nodes in the graph, alongside low-level nodes like multiply, add and routing, and even code templates that compile in real time. So it is a kind of modular DAW without metaphors like channel, send and return: totally free routing.

The good part is that the graph-building framework is already there, as is a GUI main loop and everything else. The only things I have to code are the audio engine (with a time clock), the audio signal data type, MIDI/time events and so on.
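One common way to build the time clock is to keep the playhead as a sample counter owned by the audio thread and derive musical time from it. Tempo-related events can then be placed at an exact sample offset inside the current block instead of snapping to block boundaries. A minimal sketch, assuming a constant tempo; `TransportClock` and its members are hypothetical names, not NAudio types:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical transport clock (not an NAudio type). The sample counter is the
// single source of truth; musical time is derived from it.
public sealed class TransportClock
{
    public double SampleRate { get; }
    public double Bpm { get; set; }

    // Absolute index of the first sample of the block about to be rendered.
    public long SamplePosition { get; private set; }

    public TransportClock(double sampleRate, double bpm)
    {
        SampleRate = sampleRate;
        Bpm = bpm;
    }

    public double SamplesPerBeat => SampleRate * 60.0 / Bpm;

    // Beat position of an absolute sample index (valid only for a constant tempo).
    public double BeatAt(long sample) => sample / SamplesPerBeat;

    // Offsets (0 .. blockSize-1) inside the next block at which a new beat starts.
    public List<int> BeatOffsetsInBlock(int blockSize)
    {
        var offsets = new List<int>();
        double spb = SamplesPerBeat;
        long blockEnd = SamplePosition + blockSize;

        for (long beat = (long)Math.Floor(SamplePosition / spb); ; beat++)
        {
            // a beat starts on the first sample at or after its exact time
            long beatSample = (long)Math.Ceiling(beat * spb);
            if (beatSample < SamplePosition) continue;
            if (beatSample >= blockEnd) break;
            offsets.Add((int)(beatSample - SamplePosition));
        }
        return offsets;
    }

    // Called by the audio thread after a block has been rendered.
    public void Advance(int blockSize) => SamplePosition += blockSize;
}
```

A note-on scheduled on a beat would be handed to the receiving node together with its offset, and the node splits its rendering at that sample. With tempo changes, the beat position would have to be accumulated block by block rather than computed with `BeatAt`.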

Input from people who have experience with audio engines and their architecture would be especially helpful at this stage. The first steps are not about audio algorithms but about questions like:

- What kind of calculations should be done in the audio interrupt/callback, what can be done elsewhere, and what kind of scheduling is commonly used?
- How are time, BPM and note events handled and triggered optimally?
- How is data transferred to and from the GUI main loop with the least possible latency?
- How should plugin/algorithm latency be handled?
- How can overall latency be minimized?
- How do GUI elements handle latency? For example, how is a cursor that runs over a sound file kept in sync with the audio you are actually hearing right now?
- ...
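For getting data to and from the GUI main loop, a widely used pattern is to never share locked state with the audio callback and instead pass small messages through a lock-free single-producer/single-consumer ring buffer, one queue per direction. A minimal sketch, assuming exactly one producer thread and one consumer thread per queue; `SpscQueue` and `ParameterChange` are hypothetical names:

```csharp
using System;
using System.Threading;

// Hypothetical lock-free single-producer/single-consumer ring buffer.
// Exactly one thread may call TryEnqueue (e.g. the GUI thread) and exactly
// one other thread may call TryDequeue (e.g. the audio thread).
public sealed class SpscQueue<T>
{
    private readonly T[] _items;
    private int _head; // next slot to read; written only by the consumer
    private int _tail; // next slot to write; written only by the producer

    public SpscQueue(int capacity)
    {
        if (capacity < 1) throw new ArgumentOutOfRangeException(nameof(capacity));
        _items = new T[capacity + 1]; // one slot stays free to tell "full" from "empty"
    }

    // Producer side. Returns false instead of blocking when the queue is full.
    public bool TryEnqueue(T item)
    {
        int tail = _tail;
        int next = (tail + 1) % _items.Length;
        if (next == Volatile.Read(ref _head)) return false;
        _items[tail] = item;
        Volatile.Write(ref _tail, next); // publish only after the item is stored
        return true;
    }

    // Consumer side. Never blocks and never allocates.
    public bool TryDequeue(out T item)
    {
        int head = _head;
        if (head == Volatile.Read(ref _tail))
        {
            item = default;
            return false;
        }
        item = _items[head];
        Volatile.Write(ref _head, (head + 1) % _items.Length); // free the slot
        return true;
    }
}

// Example message type: a struct, so enqueuing does not allocate.
public struct ParameterChange
{
    public int NodeId;
    public float Value;
}
```

The audio thread would drain its queue at the start of each block and apply the changes before processing. A second queue in the opposite direction can carry meter values or the playhead position back to the GUI, which typically subtracts the device's output latency before drawing a cursor.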

As the audio library I am using NAudio, which is C#/.NET style, and I used its ISampleProvider interface as a starting point.

So far the signal format is 32-bit float in the range -1..1, and the base class of an audio processor looks something like this:

```csharp
using System;
using NAudio.Utils; // BufferHelpers.Ensure

public class AudioSignal
{
    // ...

    protected AudioSignal FUpstreamProvider; // the input node
    protected object FUpstreamLock = new object();
    protected float[] FReadBuffer = new float[1];
    private bool FNeedsRead = true; // will be set to true after each graph evaluation

    public int Read(float[] buffer, int offset, int count)
    {
        FReadBuffer = BufferHelpers.Ensure(FReadBuffer, count);

        if (FNeedsRead) // only the first call per block computes the audio
        {
            // fill the cache from index 0; the caller's offset applies only to 'buffer'
            this.CalcBuffer(FReadBuffer, 0, count);
            FNeedsRead = false;
        }

        // every call (one per downstream reader) copies the cached block
        Array.Copy(FReadBuffer, 0, buffer, offset, count);
        return count;
    }

    // subclasses override this method; the base version passes the upstream signal through
    protected virtual void CalcBuffer(float[] buffer, int offset, int count)
    {
        // caution: if another thread (e.g. the GUI) holds this lock, the audio thread waits here
        lock (FUpstreamLock)
        {
            if (FUpstreamProvider != null)
                FUpstreamProvider.Read(buffer, offset, count);
        }
    }
}
```
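The `FNeedsRead` flag makes the pull model evaluate each node once per block even when several downstream nodes read from it. A common alternative is to compute a processing order once whenever the graph changes and then process the nodes in that order on every block. The same pass can compute plugin delay compensation, i.e. how many samples of delay each connection needs so that all inputs of a node line up. A sketch under these assumptions: nodes are integer ids, connections are `(From, To)` pairs, each node reports a fixed latency in samples (VST plugins can report theirs to the host), and `GraphScheduler` is a hypothetical name:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical scheduler: nodes are 0..nodeCount-1, edges are (From, To) connections.
public static class GraphScheduler
{
    // Kahn's topological sort: every node appears after all nodes feeding it,
    // so one pass per block processes each node exactly once.
    public static List<int> ProcessingOrder(int nodeCount, IList<(int From, int To)> edges)
    {
        var inDegree = new int[nodeCount];
        var outgoing = new List<int>[nodeCount];
        for (int i = 0; i < nodeCount; i++) outgoing[i] = new List<int>();
        foreach (var (source, target) in edges)
        {
            outgoing[source].Add(target);
            inDegree[target]++;
        }

        var ready = new Queue<int>();
        for (int i = 0; i < nodeCount; i++)
            if (inDegree[i] == 0) ready.Enqueue(i);

        var order = new List<int>(nodeCount);
        while (ready.Count > 0)
        {
            int n = ready.Dequeue();
            order.Add(n);
            foreach (int m in outgoing[n])
                if (--inDegree[m] == 0) ready.Enqueue(m);
        }

        // leftover nodes sit on a cycle; feedback needs an explicit delay node
        if (order.Count != nodeCount)
            throw new InvalidOperationException("The graph contains a cycle.");
        return order;
    }

    // Delay compensation: per edge, how many samples the signal on that edge
    // must be delayed so that all inputs of the target node are aligned.
    public static Dictionary<(int From, int To), int> CompensationDelays(
        int nodeCount, IList<(int From, int To)> edges, int[] nodeLatency)
    {
        var incoming = new List<int>[nodeCount];
        for (int i = 0; i < nodeCount; i++) incoming[i] = new List<int>();
        foreach (var (source, target) in edges) incoming[target].Add(source);

        var inputArrival = new int[nodeCount];  // latest arrival at a node's inputs
        var outputArrival = new int[nodeCount]; // total latency at a node's output
        foreach (int n in ProcessingOrder(nodeCount, edges))
        {
            int latest = 0;
            foreach (int input in incoming[n]) latest = Math.Max(latest, outputArrival[input]);
            inputArrival[n] = latest;
            outputArrival[n] = latest + nodeLatency[n];
        }

        var delays = new Dictionary<(int From, int To), int>();
        foreach (var (source, target) in edges)
            delays[(source, target)] = inputArrival[target] - outputArrival[source];
        return delays;
    }
}
```

For example, if an input feeds both a plugin with 64 samples of latency and a dry path, and both go into the same mixer, the connection from the dry path to the mixer gets a 64-sample delay and all other connections get none.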

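```csharp
using System;

public class SineSignal : AudioSignal
{
    public SineSignal(double frequency)
        : base(44100)
    {
        Frequency = frequency;
    }

    private double Frequency;
    public double Gain = 0.1;
    private const double TwoPi = Math.PI * 2;
    private double phase = 0; // current phase in radians, kept in [0, 2*pi)

    protected override void CalcBuffer(float[] buffer, int offset, int count)
    {
        var sampleRate = this.WaveFormat.SampleRate;
        var phaseIncrement = TwoPi * Frequency / sampleRate;

        for (int i = 0; i < count; i++)
        {
            // sine generator; write relative to the caller's offset
            buffer[offset + i] = (float)(Gain * Math.Sin(phase));

            // advance and wrap the phase instead of counting samples, so the
            // argument of Math.Sin stays small and cannot overflow over long runs
            phase = (phase + phaseIncrement) % TwoPi;
        }
    }
}
```

best,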

tf