How does Multicore work on U-He Synths? Still a mystery

- u-he

- 28063 posts since 8 Aug, 2002 from Berlin

I think that posting my findings here is a bit of therapy for a shitty day the other day.

Also, as I said before, this is a recurring thing, partly regurgitated by people who seem to entertain multiple accounts, as far as my mod powers suggest. Happens, I don't know, twice or thrice a year, so maybe in future I'll just reply with a link to this topic.

-

- KVRAF

- 2023 posts since 23 May, 2012 from London

- Banned

- 2288 posts since 24 Mar, 2015 from Toronto, Canada

Thanks for this great post, Urs. Just a question: what is the correct or proper way to check the CPU usage of a VST? Is there a recommended way? I get the impression many are not checking correctly. Should we start with one oscillator and work upwards from there, or should we start with a default init of the VST?

Urs wrote: ↑Mon Mar 15, 2021 8:18 am

Thank you so much!

Developers are always confronted with comparisons of their products to that of others. There is never a good answer to these, since pointing out strengths of one's own product might seem like bagging someone else's products. So we can't really make such a video ourselves.

I have actually become very careful in how I mention other synthesisers since I've been accused of bullying for saying out loud that I disliked the factory presets of a certain vintage hardware synthesiser (Alesis A6, if you need to know).

Anyhow, what you show is a good example of what I meant to say about optimisation. It is only really worth investing considerable effort when the result is obvious and foreseeable enough. If, say, an 8x unison oscillator uses, say, 10% CPU while 4x unison uses 5%, I would assume that 1x goes down below 2%. That's an optimisation I would certainly go for, since it scales proportionally. But if 8x uses 10% while 4x uses 8%, I'd assume that the step from 4x to 1x may be too minimal to pursue. In fact, the logistics behind the optimisation itself may make things worse.
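The reasoning above amounts to drawing a straight line through two measurements: a fixed base cost plus a cost per unison voice. A minimal sketch of that extrapolation, using only the illustrative percentages from the post (the function name and the linear model are assumptions for illustration, not how Hive is actually profiled):

```python
def extrapolate_unison_cost(cost_at_8x: float, cost_at_4x: float, voices: int = 1) -> float:
    """Estimate CPU % at `voices` unison voices from measurements at 8x and 4x.

    Assumes a linear model: cost = base + per_voice * voices.
    """
    per_voice = (cost_at_8x - cost_at_4x) / (8 - 4)
    base = cost_at_4x - per_voice * 4
    return base + per_voice * voices


# Proportional scaling: 10% at 8x, 5% at 4x
proportional = extrapolate_unison_cost(10.0, 5.0)
# Flat scaling: 10% at 8x, 8% at 4x
flat = extrapolate_unison_cost(10.0, 8.0)

print(f"proportional case, 1x: {proportional:.2f}%")
print(f"flat case, 1x: {flat:.2f}%")
```

In the first case the 1x estimate lands at 1.25%, below the 2% mentioned in the post. In the second it only drops from 8% to 6.5%, which is the kind of small gain that may not justify the effort.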

Now for some technobabble: Optimizing Hive's oscillators for 1x/2x unison may involve templating and specialising the algorithm. This leads to duplicated code blocks with small variations. This in turn means that memory access for executable code multiplies, as multiple versions of the algorithm may need to be loaded into memory. This may be good for laboratory settings, but in the real world it easily degrades overall performance.

To further fuel things, it is our experience that SSE-like parallelisation ("4 at once") really only ever halves CPU. That is, we "get 4 for the price of 2". Therefore, there may only be a path to ever optimise for 1x oscillators as 2x will already perform exactly like 4x, and the outcome will be nowhere near 1/4 of the CPU. The fact that unison does not scale proportionally means that there isn't only time spent on actual playback - there is also time spent on logistics behind shared parameters, such as tune, modulation and mixing. Factoring this in, I think a 1x Hive oscillator would probably consume 0.12% instead of 0.16% which is nowhere near the 0.04% that one would naively expect.
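As a rough check on those figures, the sketch below splits the 0.16% into shared overhead and vectorised oscillator work. It assumes, based on the "4 for the price of 2" remark, that a scalar 1x path costs half of the 4-wide path. The split is back-calculated from the 0.12% estimate and is purely illustrative; the post gives no such breakdown.

```python
def split_cost(total_4x: float, estimated_1x: float, scalar_ratio: float = 0.5):
    """Back out shared overhead and 4-wide oscillator cost.

    Model (an assumption, not taken from Hive's code):
        total_4x     = overhead + simd_work
        estimated_1x = overhead + scalar_ratio * simd_work
    """
    simd_work = (total_4x - estimated_1x) / (1.0 - scalar_ratio)
    overhead = total_4x - simd_work
    return overhead, simd_work


overhead, simd_work = split_cost(total_4x=0.16, estimated_1x=0.12)
naive_1x = 0.16 / 4  # what proportional scaling would predict

print(f"shared overhead: {overhead:.2f}%")
print(f"4-wide oscillator work: {simd_work:.2f}%")
print(f"naive 1x estimate: {naive_1x:.2f}%")
```

Under this model about half of the 0.16% would be logistics that no unison-specific optimisation can remove, which is consistent with the 0.12% figure sitting far above the naive 0.04%.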

These are some of my thoughts in regards to further optimisation of Hive. Not processing an oscillator at all if it isn't selected in a filter input is a separate topic, and one that I might actually pursue.

Gear & Setup: Windows 10, Dual Xeon, 32GB RAM, Cubase 10.5/9.5, NI Komplete Audio 6, NI Maschine, NI Jam, NI Kontakt

-

excuse me please

- KVRAF

- 1631 posts since 10 Oct, 2018

- u-he

- 28063 posts since 8 Aug, 2002 from Berlin

You just load a preset. If you need an init preset, make one called "my init" or something.

Once a preset is loaded, the preset name hides the version and registration info.

- u-he

- 28063 posts since 8 Aug, 2002 from Berlin

I don't think there's a proper rule, and it's nearly impossible to know how DAWs/hosts and plug-ins actually determine the CPU usage.

As you can see with Hive, it's not always possible to judge overall CPU usage from isolated settings. Hive simply has a "base consumption" from which it doesn't increase much under full load. Whereas other synths, e.g. Zebra, are built upon an infrastructure that starts low, but then builds up into a CPU hog.

Likewise, there is CPU usage and then there is CPU usage. Are we measuring overall median performance or are we measuring maximum performance? Not sure if any such information is given for any host software often used in such comparisons.

Hive, for instance, can play more voices than are selected for a very short time whenever notes overlap. This is done to minimise clicking sounds. Other synthesisers may not render such "ghost voices", but they may be prone to small artefacts or exhibit jitter (Hive does that, too, when the number of voices is completely exhausted). Our strategy may cause a short period of a few milliseconds of extra CPU "for the cause". If a host displays CPU merely based on maximum performance, Hive may look more expensive than a synth that makes other compromises, even when both are set to the same number of voices.

I recently came across an edge case where CPU performance seemed to spike because of buffer size. If an event, let's say a NoteOn, briefly adds 10x CPU for a single sample, pressing a chord will have a big CPU impact with a small buffer size, but it's almost invisible with a large buffer. So if a host sometimes processes 1 sample (some do) and measures the CPU, it may show a very big spike that is not there with real buffers (hardware latency).
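The buffer-size effect can be put into numbers with a toy model. The sketch below assumes every sample costs one unit and that each NoteOn adds a one-off cost to a single sample; the 10x factor is the one from the post, while the function name, the buffer sizes and the four-note chord are illustrative assumptions.

```python
def relative_load(buffer_size: int, events: int = 1, event_factor: float = 10.0) -> float:
    """Load of one buffer relative to an event-free buffer of the same size.

    Assumes every sample costs 1 unit and each event adds
    (event_factor - 1) units of one-off work (illustrative numbers).
    """
    extra = events * (event_factor - 1.0)
    return (buffer_size + extra) / buffer_size


# A four-note chord landing in buffers of different sizes
for size in (1, 32, 256, 1024):
    print(f"{size:>5} samples: {relative_load(size, events=4):.2f}x")
```

In this model a four-note chord multiplies the cost of a 1-sample block by 37, but adds only about 3.5% to a 1024-sample block, so a meter that samples the short block reports a spike the audio hardware never sees.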

Some people measure performance using e.g. top or CPU Monitor. What they see also includes what happens in the UI and in background threads. Those are typically low priority and don't say much about the maximum performance possible.

- KVRAF

- 25416 posts since 3 Feb, 2005 from in the wilds

That is why I find adding instances until crackling a decent practical test for real-world performance, and one which removes the CPU meter from the equation.

-

machinesworking

- KVRAF

- 6209 posts since 15 Aug, 2003 from seattle

I do too, but it gets weird.

Diva, in the setting right below divine, fits perfectly into my 2x6-core 3.33 GHz Mac Pro in Bitwig. What I mean is that this distinct combination seems to use all of the CPU of a single core; the math is just much more linear than with any other VSTi. So CPU-heavy Diva in combination with Bitwig, for whatever reason, outperforms other DAWs and acts closer to a regular VSTi in that specific configuration. Outside of that, Bitwig with other VSTis acts like Live: its real-time processing costs roughly 30% in CPU versus DP, Logic, Reaper etc.

-

- KVRAF

- 2897 posts since 3 Mar, 2006

Bitwig does the right thing imo for measuring CPU use: "how far ahead of time are the buffer calculations finished". That's a no-nonsense approach, because the 100% wall is exactly where artifacts will appear. It does, however, mean that it doesn't measure "per core": if you use a non-multicore plugin with a really intense patch, it will show as 80% CPU usage, but that's really only one core running at 80%, so if you duplicated it 3 more times on a 4-core CPU the meter wouldn't move.
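A deadline-style meter of this kind can be sketched as processing time divided by the real-time duration of the buffer. This is not Bitwig's actual implementation; the function names, the 256-frame buffer at 48 kHz and the assumption that each instance runs on its own core are all illustrative.

```python
def deadline_load(processing_seconds: float, frames: int, sample_rate: float) -> float:
    """Fraction of the buffer's real-time budget spent rendering it."""
    budget = frames / sample_rate
    return processing_seconds / budget


def meter_reading(per_core_seconds: list[float], frames: int, sample_rate: float) -> float:
    """Deadline meter for work running in parallel: the slowest core decides."""
    return max(deadline_load(t, frames, sample_rate) for t in per_core_seconds)


FRAMES, RATE = 256, 48_000.0
heavy_patch = 0.8 * FRAMES / RATE  # one instance that needs 80% of the budget

# One instance on a 4-core machine, then one instance per core (assumed scheduling)
one_instance = meter_reading([heavy_patch, 0.0, 0.0, 0.0], FRAMES, RATE)
four_instances = meter_reading([heavy_patch] * 4, FRAMES, RATE)

print(f"one instance:   {one_instance:.0%}")
print(f"four instances: {four_instances:.0%}")
```

Both readings come out at 80% in this model, which matches the point in the post: the meter shows how close the slowest path is to the deadline, not how much of the whole CPU is in use.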

-

machinesworking

- KVRAF

- 6209 posts since 15 Aug, 2003 from seattle

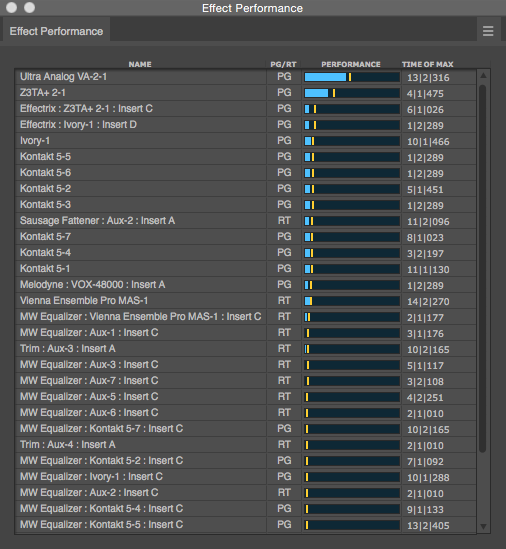

Yeah, this is where my old-school DAW Digital Performer does it right. Every plug-in is individually calculated in the Effect Performance window, and of course there is a single meter for all CPU use. Compare that with Bitwig, which has only a single meter (although the fact that it's a running graph is fantastic), or Logic, which has separate meters per core but doesn't display the effect of individual plug-ins on CPU use. DP also lets you know which plug-ins are not buffered or "pre-rendered", which isn't really a thing in Bitwig and Live because of their real-time performance nature.

Last edited by machinesworking on Wed Mar 17, 2021 8:14 pm, edited 1 time in total.

-

machinesworking

- KVRAF

- 6209 posts since 15 Aug, 2003 from seattle

Using my double post to say one big thing in favor of Bitwig's graph: you can actually see that certain plug-ins have CPU spikes, which may help if the developers of said plug-ins can use that information to fix the issue in Bitwig.