I’m currently modeling a Big Muff Pi fuzz stompbox, more precisely the one I have at hand, a “Green Russian Tall Font” version. It was my first guitar pedal back in the 90s and it is still in relatively good shape. I would like some help or advice from experienced people here, but first I’m going to present the details of the project. Just tell me if I did something wrong.

My goal is to run the emulation on a Raspberry Pi 4, which is the audio processor of my own pedalboard. I’m not sure it will have enough processing power to handle the BMP along with other effects, but I’ll try.

Of course, whatever I come up with will be free and open source, so feel free to reuse or modify anything. For the circuit simulation, I’ve set up a nodal DK-method program. More on this later.

The BMP input stage has a fairly low impedance for a guitar device, so the output impedance of the previous element in the chain (a guitar, another pedal or a buffer) may have a significant effect on the sound. I deliberately chose to use low-output-impedance signals (like the one coming from a buffer) for all the measurements and simulations. The reason is that there are as many different output impedances as there are guitars, and it seems that people generally don’t hear much difference in the BMP tone with or without a buffer, unlike with some other fuzz pedals such as the Fuzz Face.

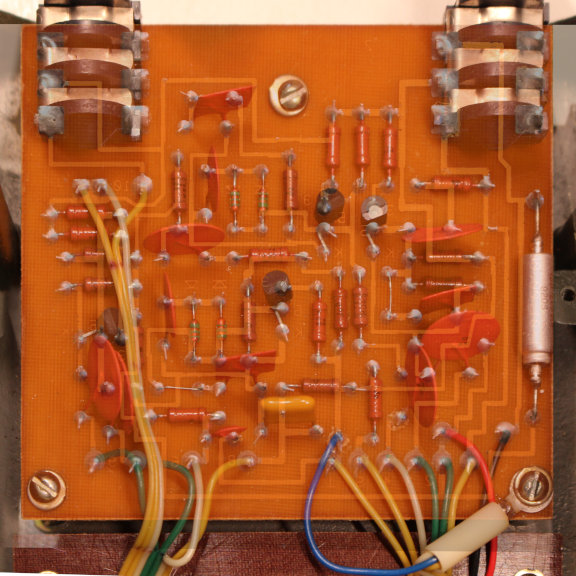

Overlaying pictures of both sides of the PCB helps to identify the parts

I did some initial measurements. I ran a test signal through the pedal and recorded it at different points in the circuit, then repeated this with different settings. Recordings were made with an audio interface, a Fireface UCX. To minimize any disturbance of the BMP and any saturation on the interface side, I built a high-impedance buffer and attenuator and inserted it between the measurement point and the audio interface input. It is not totally transparent (there’s a -90 dB rumble from its power supply), but it should be OK. Recordings were made at 24 bits, 88.2 kHz. The input signal has a nominal amplitude of about 1 V (2 V peak-to-peak) and is repeated at -20, -40 and -60 dB. It is made of a 10-octave sine sweep and some other waveforms (saws in both directions and a square). I think this covers most of what I need to achieve a decent emulation. I probably could have done this with my oscilloscope, but I think this way is more convenient.
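For anyone who wants to build a comparable stimulus for a simulation, here is a minimal sketch of the sweep part only, repeated at the four levels. The start frequency (20 Hz), the sweep duration and the function names are assumptions for illustration, not the values used for the recordings; the saw and square segments are left out, and the mapping from full scale (1.0) to volts depends on the interface calibration.

```python
import math

def log_sweep(f_start, octaves, duration, sample_rate):
    """Exponential sine sweep covering `octaves` octaves, starting at f_start (Hz)."""
    n = int(duration * sample_rate)
    # Instantaneous frequency: f(t) = f_start * exp(k * t), with k chosen so that
    # f(duration) = f_start * 2**octaves. The phase is 2*pi times its integral.
    k = octaves * math.log(2.0) / duration
    return [
        math.sin(2.0 * math.pi * f_start * (math.exp(k * i / sample_rate) - 1.0) / k)
        for i in range(n)
    ]

def db_to_gain(level_db):
    """Convert a level in dB to a linear amplitude factor."""
    return 10.0 ** (level_db / 20.0)

def build_test_signal(levels_db=(0, -20, -40, -60), f_start=20.0, octaves=10,
                      duration=1.0, sample_rate=88200):
    """Concatenate the same sweep at each level (assumed values, see text)."""
    sweep = log_sweep(f_start, octaves, duration, sample_rate)
    signal = []
    for level in levels_db:
        gain = db_to_gain(level)
        signal.extend(gain * s for s in sweep)
    return signal
```

With these assumed defaults the sweep ends at 20 Hz × 2^10 = 20480 Hz, which stays below the Nyquist frequency at 88.2 kHz.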

Measurements can be downloaded here. Files are named with the three knob values (d = distortion, t = tone, v = volume), from 0 (fully CCW) to 1 (fully CW), and the measurement point (e.g. q2c = transistor Q2 collector). The buffer is also recorded alone, for scaling purposes and to check the disturbances introduced by the whole recording chain (noise, mains hum, DC removal, etc.).

I also read the bias voltages for all transistors:


| | Q4 | Q3 | Q2 | Q1 |
|---|---|---|---|---|
| V_C | 4.60 | 4.42 | 4.74 | 5.70 |
| V_B | 0.756 | 0.749 | 0.739 | 1.517 |
| V_E | 0.1515 | 0.154 | 0.1329 | 0.928 |

I modeled the whole circuit according to this schematic. There are some minor differences: the base-collector feedback caps are single 510 pF parts (with wire links in the second cap locations), and the transistors are labelled “EE1”. The pots are labelled “CП4-2M D7 18T 150K”, except for the volume pot, which has D9 instead of D7, so I guess they are 150 kΩ.
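As a quick sanity check on the bias readings above, the base-emitter and collector-emitter voltages follow directly from the table (V_BE = V_B − V_E, V_CE = V_C − V_E). The sketch below assumes the readings are in volts and that the columns map to Q4, Q3, Q2, Q1 in that order; the names `BIAS` and `junction_voltages` are only illustrative.

```python
# Bias readings copied from the table above (assumed to be in volts).
BIAS = {
    "Q4": {"V_C": 4.60, "V_B": 0.756, "V_E": 0.1515},
    "Q3": {"V_C": 4.42, "V_B": 0.749, "V_E": 0.154},
    "Q2": {"V_C": 4.74, "V_B": 0.739, "V_E": 0.1329},
    "Q1": {"V_C": 5.70, "V_B": 1.517, "V_E": 0.928},
}

def junction_voltages(bias):
    """Return V_BE and V_CE for each transistor, rounded to 0.1 mV."""
    return {
        name: {
            "V_BE": round(v["V_B"] - v["V_E"], 4),
            "V_CE": round(v["V_C"] - v["V_E"], 4),
        }
        for name, v in bias.items()
    }

if __name__ == "__main__":
    for name, v in junction_voltages(BIAS).items():
        print(f"{name}: V_BE = {v['V_BE']:.4f} V, V_CE = {v['V_CE']:.4f} V")
```

All four V_BE values come out between roughly 0.59 V and 0.61 V, which is what one would expect from forward-biased silicon junctions, so these numbers can serve as targets when checking the DC operating point of the simulated transistors.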

The DK-method simulation runs far too slowly at the moment, but that is a lesser concern for now. The results are quite close to the SPICE ones, but not close enough to the original pedal. Of course, this was expected. Now I’m trying to tweak the model, stage by stage, to match the BMP as closely as possible. This is where I need some help.

I’m working on the input stage. I modified some resistor and capacitor values so that it responds correctly, but I have stumbled on something I don’t understand. With low-level signals (-40 dB, the third one, starting at 3:27 in the measurement samples), the original BMP input stage exhibits some light distortion, with almost only odd harmonics. The SPICE simulation does the same, but mine has all the harmonics (and probably too much of them). I tried to tweak the part parameters, to no avail. I use simple stateless models for the diodes and transistors (Shockley and Ebers-Moll), so I guess the difference comes from there. But before I try to implement much more complex models like Gummel-Poon, does anyone have an idea about what could cause the small-signal distortion to be more or less symmetrical instead of asymmetrical?
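To put numbers on "odd only" versus "all harmonics", a small sketch like the following can help. It measures harmonic amplitudes of a memoryless nonlinearity driven by a sine, by correlating one exact period against each harmonic. The two test functions are generic illustrations, not models of the BMP input stage: a single Shockley-style exponential (asymmetric) and two identical exponentials back to back (odd-symmetric). The thermal voltage value, the 10 mV drive level and all names are assumptions.

```python
import math

V_T = 0.02585  # thermal voltage near 300 K, in volts (assumed)

def harmonic_levels(nonlinearity, amplitude, n_harmonics=5, n_samples=4096):
    """Amplitudes of harmonics 1..n_harmonics of nonlinearity(amplitude * sin)."""
    # One exact period, so the correlation below has no leakage.
    out = [nonlinearity(amplitude * math.sin(2.0 * math.pi * i / n_samples))
           for i in range(n_samples)]
    levels = []
    for h in range(1, n_harmonics + 1):
        re = sum(y * math.cos(2.0 * math.pi * h * i / n_samples)
                 for i, y in enumerate(out))
        im = sum(y * math.sin(2.0 * math.pi * h * i / n_samples)
                 for i, y in enumerate(out))
        levels.append(2.0 * math.hypot(re, im) / n_samples)
    return levels

def exp_junction(v):
    """Single exponential junction, current normalised to I_S = 1 (asymmetric)."""
    return math.exp(v / V_T) - 1.0

def antiparallel_pair(v):
    """Two identical junctions back to back: an odd-symmetric transfer curve."""
    return math.exp(v / V_T) - math.exp(-v / V_T)

if __name__ == "__main__":
    for name, f in (("single junction", exp_junction),
                    ("antiparallel pair", antiparallel_pair)):
        levels = harmonic_levels(f, amplitude=0.01)
        print(name, ["%.2e" % x for x in levels])
```

The single exponential produces every harmonic, while the odd-symmetric pair leaves the even harmonics at the numerical noise floor. Running the same measurement on the recorded q-point signals, the SPICE output and the DK-method output at the same level would show at which harmonic, and by how much, the three start to diverge.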

Thank you, and sorry for the length.